AWS S3: Delete Files Programmatically

Intro

There are times when you will need to programmatically delete all of the files in a sub-folder in S3 en masse. For example, if you have a data lake in S3 and you are troubleshooting some Glue transformations on large datasets, you may need to repeatedly delete hundreds or even thousands of files in one or several locations while you work through the issues.

Another example: if I have an application that creates a folder for each customer and a customer wants their items deleted, I need to be able to do that programmatically.

We can write a Lambda function to do just that.

The Function

One of the things that makes this a bit tricky is that we cannot simply tell the S3 SDK to delete a specific folder; we must specify every single file that we want to delete. S3 has no real folders, only object keys that share a prefix. Therefore we first need to list all the files under the prefix we want to clear with the listObjectsV2 method.
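To make the "specify every file" requirement concrete, here is a minimal sketch of turning a list of keys into request parameters for deleteObjects. The helper name buildDeleteBatches is hypothetical and not part of the SDK. It also splits the keys into groups of at most 1000, since a single deleteObjects request accepts no more than 1000 keys.

```javascript
// Hypothetical helper (not an SDK function): build one deleteObjects
// params object per batch of keys. S3 accepts at most 1000 keys per request.
function buildDeleteBatches(bucket, keys, batchSize = 1000) {
  const batches = [];
  for (let i = 0; i < keys.length; i += batchSize) {
    batches.push({
      Bucket: bucket,
      Delete: {
        // Each entry must be an object with a Key property.
        Objects: keys.slice(i, i + batchSize).map((Key) => ({ Key }))
      }
    });
  }
  return batches;
}
```

Each element of the returned array could then be passed to s3.deleteObjects(...).promise() in turn.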

Then we run into another wrinkle. The listObjectsV2 method returns a maximum of 1000 items per call.

Therefore we also need to check the response of the listObjectsV2 method to see if the list has been truncated (IsTruncated), which indicates that there are more files left to list. We delete the files we got back, and then run the listObjectsV2 method again until the list is no longer truncated.
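The list, delete, repeat cycle can also be written as a loop. The following is only a sketch, assuming an AWS SDK for JavaScript v2 style client whose methods return an object with .promise(). The function name deletePrefix is hypothetical, and the client is passed in as an argument so that the loop can be tried against a stub before pointing it at a real bucket. It follows NextContinuationToken between pages, which is one way to page through results; re-listing from the start after each delete, as described above, is another.

```javascript
// Sketch: delete every object under a prefix, one page (up to 1000 keys) at a time.
// `s3` is assumed to expose listObjectsV2 and deleteObjects with .promise(),
// as the AWS SDK for JavaScript v2 S3 client does.
async function deletePrefix(s3, bucket, prefix) {
  let token; // undefined for the first page
  let deleted = 0;

  do {
    const listParams = { Bucket: bucket, Prefix: prefix };
    if (token) listParams.ContinuationToken = token;

    const page = await s3.listObjectsV2(listParams).promise();
    const contents = page.Contents || [];

    if (contents.length > 0) {
      // Wait for this page to be deleted before requesting the next one.
      await s3.deleteObjects({
        Bucket: bucket,
        Delete: { Objects: contents.map(({ Key }) => ({ Key })) }
      }).promise();
      deleted += contents.length;
    }

    // IsTruncated signals that more keys remain beyond this page.
    token = page.IsTruncated ? page.NextContinuationToken : undefined;
  } while (token);

  return deleted;
}
```

The return value is the number of keys submitted for deletion, which is handy for logging.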

In addition to these considerations, we want to be able to feed our function an array of folders, so that we can delete items in multiple locations at once without running the function again.

Lastly, we want the function to take event variables into account, such as profile_id, so that we can run it programmatically through an API.
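Because the prefixes are built from an event variable, it may be worth validating that variable before anything is deleted. The sketch below is one way to do it; the helper name buildPrefixes, the folder list constant, and the allowed-character rule are all assumptions for illustration. It rejects a missing profile_id, and it appends a trailing slash so that profile_id=1 cannot also match keys under profile_id=10. This assumes keys are laid out as .../profile_id=<id>/<file>.

```javascript
// Hypothetical list of per-customer folders, mirroring the article's layout.
const CUSTOMER_FOLDERS = [
  "customer-data",
  "customer-files",
  "customer-pictures",
  "customer-videos"
];

// Hypothetical helper: build the prefixes to empty for one profile.
function buildPrefixes(event) {
  const id = event ? event.profile_id : undefined;
  if (typeof id !== "string" && typeof id !== "number") {
    throw new Error("profile_id is required");
  }
  const idText = String(id);
  // Assumed rule: IDs contain only letters, digits, underscores, and hyphens.
  if (!/^[A-Za-z0-9_-]+$/.test(idText)) {
    throw new Error("profile_id contains unexpected characters");
  }
  // The trailing slash stops profile_id=1 from matching profile_id=10.
  return CUSTOMER_FOLDERS.map(
    (folder) => `folder/sub-folder/${folder}/profile_id=${idText}/`
  );
}
```

Without a check like this, an event with no profile_id would produce the prefix "profile_id=undefined", and a shorter ID would match every longer ID that starts with the same characters.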

If we take all that into consideration we get something like this:

// This function deletes all the objects under each prefix in directory_array for a given profile.

// Load the AWS SDK for Node.js (v2).
const AWS = require("aws-sdk");

// S3 client
const s3 = new AWS.S3({ apiVersion: "2006-03-01" });

exports.handler = async (event) => {

  async function emptyS3Directory(bucket, dir) {
    // listObjectsV2 returns at most 1000 keys per call.
    const listParams = {
      Bucket: bucket,
      Prefix: dir
    };
    const listedObjects = await s3.listObjectsV2(listParams).promise();

    if (listedObjects.Contents.length === 0) return;

    const deleteParams = {
      Bucket: bucket,
      Delete: { Objects: listedObjects.Contents.map(({ Key }) => ({ Key })) }
    };

    // Wait for the delete to finish before listing again. Otherwise the next
    // listing can return the same keys, and the handler may return before
    // the deletes have completed.
    const data = await s3.deleteObjects(deleteParams).promise();
    console.log(data);

    // Stop if some keys could not be deleted, so we never loop on them forever.
    if (data.Errors && data.Errors.length > 0) {
      throw new Error(`Failed to delete ${data.Errors.length} object(s) under ${dir}`);
    }

    // More than 1000 objects were under the prefix: list and delete again.
    if (listedObjects.IsTruncated) await emptyS3Directory(bucket, dir);
  }

  const bucket = "BUCKET_NAME";

  // The trailing slash keeps profile_id=1 from also matching profile_id=10.
  // This assumes keys look like .../profile_id=<id>/<file>.
  const directory_array = [
    `folder/sub-folder/customer-data/profile_id=${event.profile_id}/`,
    `folder/sub-folder/customer-files/profile_id=${event.profile_id}/`,
    `folder/sub-folder/customer-pictures/profile_id=${event.profile_id}/`,
    `folder/sub-folder/customer-videos/profile_id=${event.profile_id}/`
  ];

  // Loop over the directories and delete all of their objects.
  for (const dir of directory_array) {
    await emptyS3Directory(bucket, dir);
  }
};

Final Considerations

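You will want to increase the timeout on this Lambda function. The default is 3 seconds and the maximum is 15 minutes, so with the default setting the function will time out before a large delete finishes.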
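Even with a longer timeout, a very large prefix might not be emptied in a single invocation. One option, sketched below, is to check context.getRemainingTimeInMillis() (a method on the Lambda context object) between pages and stop cleanly while there is still time left. The function name deleteUntilDeadline, the deletePage callback, and the 10 second safety margin are hypothetical choices for illustration.

```javascript
// Sketch: keep deleting pages until the work is done or the Lambda is
// about to run out of time. `deletePage` is a hypothetical async callback
// that deletes one page and resolves to true while more objects remain.
async function deleteUntilDeadline(context, deletePage, safetyMs = 10000) {
  let pages = 0;
  while (context.getRemainingTimeInMillis() > safetyMs) {
    const more = await deletePage();
    pages += 1;
    if (!more) return { done: true, pages };
  }
  // Out of time: report that work remains so the caller can invoke again.
  return { done: false, pages };
}
```

A handler could return this result so that whatever invoked it knows whether another run is needed.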

Lastly, make sure the IAM permissions of this function are very tight and double check that you are pointing it in the right direction, because this is a bit of a bazooka.
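As a rough illustration of what "very tight" could look like, the sketch below builds a policy document that only allows listing and deleting under specific prefixes. The helper name buildDeletePolicy is hypothetical, and the bucket and prefixes are placeholders. Treat it as a starting point under those assumptions rather than a reviewed policy.

```javascript
// Hypothetical helper: build an IAM policy document limited to the given
// bucket and prefixes. Listing needs s3:ListBucket on the bucket itself;
// deleting needs s3:DeleteObject on the object ARNs.
function buildDeletePolicy(bucket, prefixes) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: "s3:ListBucket",
        Resource: `arn:aws:s3:::${bucket}`,
        // Only allow list requests whose prefix falls under our folders.
        Condition: {
          StringLike: { "s3:prefix": prefixes.map((p) => `${p}*`) }
        }
      },
      {
        Effect: "Allow",
        Action: "s3:DeleteObject",
        Resource: prefixes.map((p) => `arn:aws:s3:::${bucket}/${p}*`)
      }
    ]
  };
}
```

Calling JSON.stringify(buildDeletePolicy("BUCKET_NAME", ["folder/sub-folder/customer-data/"]), null, 2) produces JSON that can be reviewed and attached to the function's execution role.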

Maybe this is a good time to make sure you have versioning, or AWS Backup with point-in-time restore, enabled on your S3 buckets?

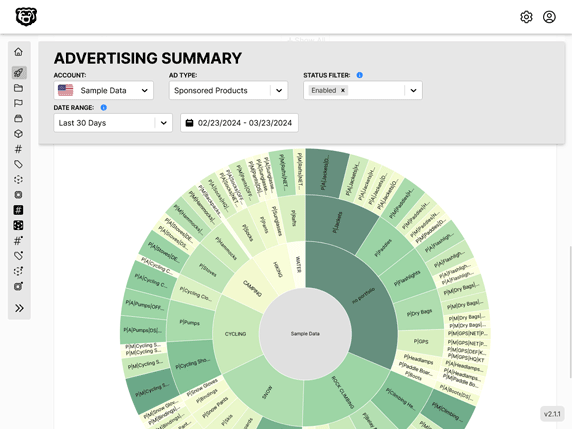

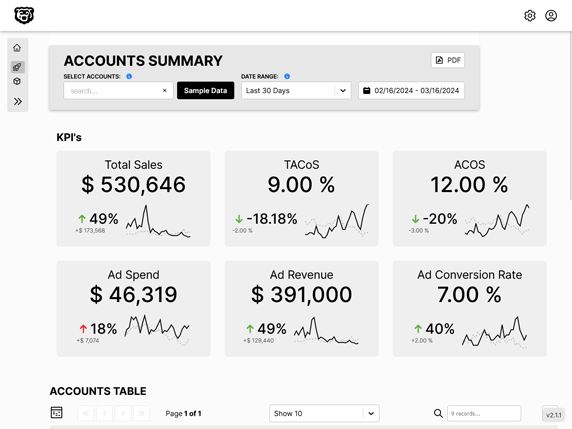

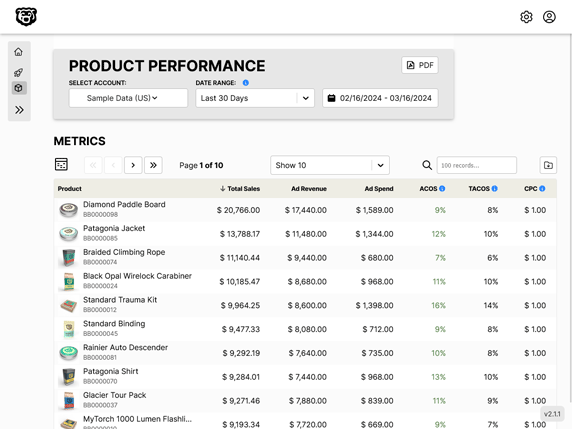

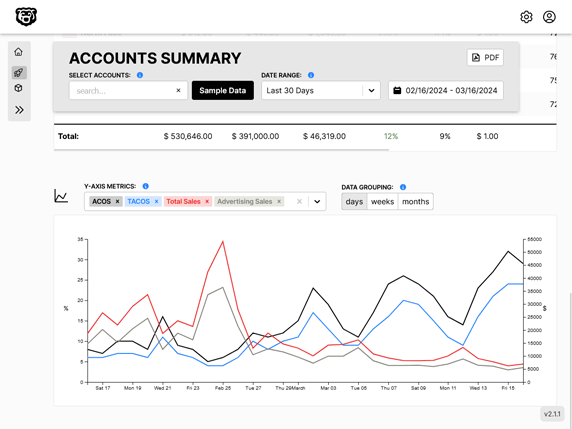

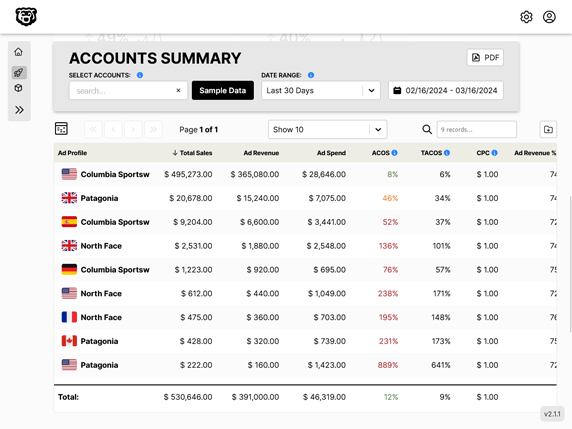

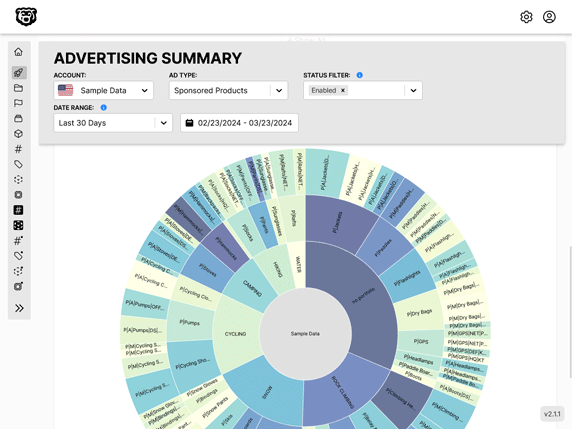

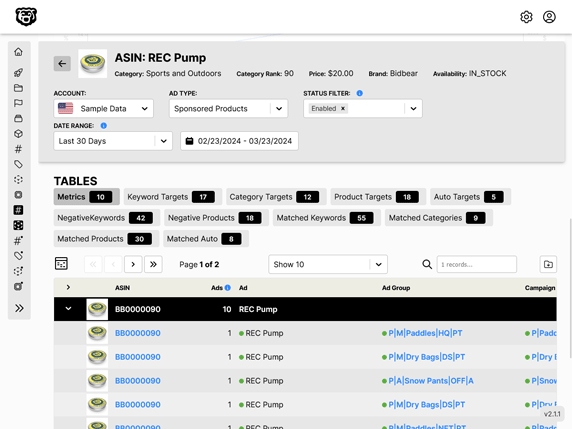
