D3 Scales & Heat Map

Intro

In our scatter plot exploration we used a linear scale, which is useful in many cases. However, D3 offers many other scales that will serve us better in other situations.

Continuous vs Discrete Datasets

Data can generally be grouped into two categories.

- Continuous: Data that can be measured

- Discrete: Data that can be counted

Continuous data is measured. It can be broken down into fractions or decimals and can take any of infinitely many values within a range.

Discrete data is counted. It takes only separate, distinct values, so it cannot be meaningfully broken down into fractions or decimals.

In practice, discrete values are often integers.

It's important to understand whether your data is continuous or discrete when choosing a scale.

scaleLinear

Input Domain - Continuous

Output Range - Continuous
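To make the continuous-to-continuous mapping concrete, here is a hand-rolled sketch of the arithmetic a linear scale performs. The helper name linearScale and the sample domain and range are assumptions for illustration only; in a real chart we would keep using d3.scaleLinear().

```javascript
// Hypothetical helper (not part of D3): maps a value from a continuous
// input domain onto a continuous output range by linear interpolation.
function linearScale([d0, d1], [r0, r1]) {
  return (value) => {
    const t = (value - d0) / (d1 - d0); // position within the domain, 0..1
    return r0 + t * (r1 - r0);          // same position within the range
  };
}

// Assumed sample domain and range.
const toPixels = linearScale([0, 100], [0, 600]);

console.log(toPixels(0));    // 0
console.log(toPixels(50));   // 300
console.log(toPixels(12.5)); // 75
```

Any value in the domain, including fractional ones, lands on its own spot in the range, which is why both sides are described as continuous.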

Heatmap

One of the popular methods of displaying discrete data is with a heatmap. Let's make a heatmap with D3.

Let's start with a basic svg where we join an array of integers to a set of squares.

There is going to be a bit of tricky math here to make this all work so let's break it out. Our heatmap is going to be made of boxes, and each box is going to be a 30 x 30 square. Therefore we know that the dimensions of our svg must be a multiple of 30 in each direction. We have set our dimensions to be 600 x 150.

600 / 30 = 20

150 / 30 = 5

So we will fit 20 boxes on the x-axis and 5 boxes on the y-axis.
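The same arithmetic can be checked in a few lines. This is only a sketch using the dimensions and box size from the text; the names columns, rows and capacity are introduced here for illustration.

```javascript
// Same values as the tutorial: a 600 x 150 svg filled with 30 x 30 boxes.
const dimensions = { width: 600, height: 150 };
const box = 30;

const columns = dimensions.width / box; // boxes per row
const rows = dimensions.height / box;   // number of rows
const capacity = columns * rows;        // total boxes that fit

console.log(columns, rows, capacity); // 20 5 100
```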

async function draw(el) {

// Data

const dataset = await d3.json('data.json')

// Dimensions

let dimensions = {

width: 600,

height: 150,

};

const box = 30;

// Draw Image

const svg = d3.select(el)

.append("svg")

.attr("width", dimensions.width)

.attr("height", dimensions.height)

// Rectangles

const squares = svg.append('g')

.classed('squares', true)

.attr('transform', 'translate(2,2)')

.attr("fill", "#ddd")

.attr('stroke', "black")

.selectAll('rect')

.data(dataset)

.join('rect')

.attr('width', box - 3)

.attr('height', box -3)

}

draw('#heatmap1')

We have given our squares a little margin of 3 pixels so they won't touch.

For now, all of our squares are stacked on top of each other, as we haven't assigned x,y coordinates tied to the data yet. Let's start with the x-coordinates.

// Rectangles

const squares = svg.append('g')

.classed('squares', true)

.attr('transform', 'translate(2,2)')

.attr("fill", "#ddd")

.attr('stroke', "black")

.selectAll('rect')

.data(dataset)

.join('rect')

.attr('width', box - 3)

.attr('height', box -3)

.attr('x', (d,i) => box * (i % 20)) // highlight-line

Here we can see our first example of the second argument that is passed into these attribute functions. The first argument has been d, which is the value of the data in each iteration, and now we have i, which is the index of the iteration, just like in a for loop.

Then we can see an interesting little modulo trick (i % 20).

This is a clever bit of programming. Typically with modulo you are dividing a larger number by a smaller number and taking the remainder. However, when the first number is smaller than the second, the first number is itself the remainder, as the second number goes into it 0 times. That holds in this loop until i = 20, at which point the remainder drops back down to zero, and the pattern repeats in cycles of twenty, matching the 20 "columns" of our heatmap. Which gives us this.
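A quick way to convince yourself is to run the same expression over a few sample indexes. This is just a sketch; the helper name xFor and the chosen indexes are for illustration.

```javascript
const box = 30;
const columns = 20;

// Same expression as the x attribute: the column index wraps every 20 items.
const xFor = (i) => box * (i % columns);

console.log([0, 1, 19, 20, 21, 40].map(xFor));
// [0, 30, 570, 0, 30, 0]
```

Index 19 is the last square in a row (x = 570), and index 20 wraps back to x = 0.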

And we can handle the y-coordinates like so

// Rectangles

const squares = svg.append('g')

.classed('squares', true)

.attr('transform', 'translate(2,2)')

.attr("fill", "#ddd")

.attr('stroke', "black")

.selectAll('rect')

.data(dataset)

.join('rect')

.attr('width', box - 3)

.attr('height', box -3)

.attr('x', (d,i) => box * (i % 20))

.attr('y', (d,i) => box * (Math.floor(i / 20)))

Or, if you are a glutton for punishment and want to learn about bitwise OR operations, you can truncate the division with | 0 instead of Math.floor

// Rectangles

const squares = svg.append('g')

.classed('squares', true)

.attr('transform', 'translate(2,2)')

.attr("fill", "#ddd")

.attr('stroke', "black")

.selectAll('rect')

.data(dataset)

.join('rect')

.attr('width', box - 3)

.attr('height', box -3)

.attr('x', (d,i) => box * (i % 20))

.attr('y', (d,i) => box * ((i / 20) | 0))
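The two row calculations can be compared side by side. This is a small sketch; the helper names rowFloor and rowBitwise are made up for the comparison.

```javascript
const columns = 20;

const rowFloor = (i) => Math.floor(i / columns);
const rowBitwise = (i) => (i / columns) | 0;

// For the non-negative indexes D3 passes in, both agree.
console.log([0, 19, 20, 45, 99].map(rowFloor));   // [0, 0, 1, 2, 4]
console.log([0, 19, 20, 45, 99].map(rowBitwise)); // [0, 0, 1, 2, 4]

// They differ for negative inputs: floor rounds down, | 0 truncates toward zero.
console.log(Math.floor(-0.5)); // -1
console.log(-0.5 | 0);         // 0
```

The bitwise version also converts its operand to a 32-bit integer, so Math.floor is the safer default; for a grid of 100 squares either works.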

Color Scales

Now that we have our grid set up, we can start working on some scales. Let's have the draw function take a second argument, the name of the scale, so that we can reuse the same function with different scales. We can make a switch statement for the scales, and then replace the previous static fill with our colorScale.

async function draw(el, scale) { // highlight-line

// Data

const dataset = await d3.json('data.json')

// Dimensions

let dimensions = {

width: 600,

height: 150,

};

const box = 30;

// Draw Image

const svg = d3.select(el)

.append("svg")

.attr("width", dimensions.width)

.attr("height", dimensions.height)

// Scales

let colorScale;

switch (scale) {

case 'linear':

colorScale = d3.scaleLinear()

.domain(d3.extent(dataset))

.range(['white','red'])

break

default:

// Fall back to a constant fill for unknown scale names

colorScale = () => "black"

}

// Rectangles

const squares = svg.append('g')

.classed('squares', true)

.attr('transform', 'translate(2,2)')

.attr('stroke', "black")

.selectAll('rect')

.data(dataset)

.join('rect')

.attr('width', box - 3)

.attr('height', box -3)

.attr('x', (d,i) => box * (i % 20))

.attr('y', (d,i) => box * (Math.floor(i / 20)))

//.attr('fill', (d) => colorScale(d))

// shortens to...

.attr('fill', colorScale)

}

draw('#heatmap1', 'linear') // highlight-line

Now we can easily add some more scales to our switch and explore our options.

Sorting Data

We can also work with the dataset itself before drawing it. For example, we can sort it in ascending order with JavaScript's built-in array sort.

// Data

const dataset = await d3.json('data.json')

dataset.sort((a,b) => a - b)

Which gives us this:

It's starting to look like a linear scale is not the best representation here. There are just too many shades of red. Instead, let's explore a scale that uses a discrete (limited) number of colors to group our data.

Quantize Scale

switch (scale) {

case 'linear':

colorScale = d3.scaleLinear()

.domain(d3.extent(dataset))

.range(['white','red'])

break

case 'quantize':

colorScale = d3.scaleQuantize()

.domain(d3.extent(dataset))

.range(['white','pink','red'])

break

default:

// Fall back to a constant fill for unknown scale names

colorScale = () => "black"

}
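A quantize scale takes a continuous domain and cuts it into equal-width segments, one for each value in the range. The sketch below imitates that behaviour in plain JavaScript so the arithmetic is visible. The helper name quantize and the sample domain [0, 300] are assumptions for illustration; it is not D3's implementation.

```javascript
// Hypothetical stand-in for d3.scaleQuantize(), for illustration only.
function quantize([min, max], range) {
  const step = (max - min) / range.length; // width of each segment
  return (value) => {
    const index = Math.floor((value - min) / step);
    // Clamp so values at or beyond the ends still get the first or last color.
    return range[Math.max(0, Math.min(range.length - 1, index))];
  };
}

// Assumed sample domain; the tutorial uses d3.extent(dataset) instead.
const colorScale = quantize([0, 300], ['white', 'pink', 'red']);

console.log(colorScale(50));  // white
console.log(colorScale(100)); // pink
console.log(colorScale(250)); // red
console.log(colorScale(300)); // red
```

With three colors and a domain of 0 to 300, the cut-off points fall at 100 and 200, regardless of how the data is distributed inside that domain.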

Quantile and Threshold Scale

async function draw(el, scale) {

// Data

const dataset = await d3.json('data.json')

dataset.sort((a,b) => a - b)

// Dimensions

let dimensions = {

width: 600,

height: 150,

};

const box = 30;

// Draw Image

const svg = d3.select(el)

.append("svg")

.attr("width", dimensions.width)

.attr("height", dimensions.height)

// Scales

let colorScale;

switch (scale) {

case 'linear':

colorScale = d3.scaleLinear()

.domain(d3.extent(dataset))

.range(['white','red'])

break

case 'quantize':

colorScale = d3.scaleQuantize()

.domain(d3.extent(dataset))

.range(['white','pink','red'])

break

case 'quantile':

colorScale = d3.scaleQuantile()

.domain(dataset)

.range(['white','pink','red'])

break

case 'threshold':

colorScale = d3.scaleThreshold()

// domain set manually

.domain([45200,135600])

.range(['white','pink','red'])

break

default:

// Fall back to a constant fill for unknown scale names

colorScale = () => "black"

}

// Rectangles

const squares = svg.append('g')

.classed('squares', true)

.attr('transform', 'translate(2,2)')

.attr('stroke', "black")

.selectAll('rect')

.data(dataset)

.join('rect')

.attr('width', box - 3)

.attr('height', box -3)

.attr('x', (d,i) => box * (i % 20))

.attr('y', (d,i) => box * (Math.floor(i / 20)))

//.attr('fill', (d) => colorScale(d))

// shortens to...

.attr('fill', colorScale)

}

draw('#heatmap1', 'linear')

draw('#heatmap2', 'quantize')

draw('#heatmap3', 'quantile')

draw('#heatmap4', 'threshold')

The data in these graphs represents household income, so we are trying to group people into income brackets. So which of these graphs best represents the data? If the thresholds that we set manually in the threshold scale come from generally accepted cut-offs for low, median, and high household income, then the threshold scale is objectively the most accurate.
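To see how the manual thresholds turn an income into a bracket, here is a plain JavaScript sketch of the lookup. The helper name threshold and the sample incomes are assumptions for illustration; the cut-off points and colors are the ones from our threshold case.

```javascript
// Hypothetical stand-in for d3.scaleThreshold(), for illustration only.
function threshold(domain, range) {
  return (value) => {
    // Count how many cut-off points the value has reached or passed.
    const index = domain.filter((cut) => value >= cut).length;
    return range[index];
  };
}

// Cut-off points and colors taken from the tutorial's threshold case.
const colorScale = threshold([45200, 135600], ['white', 'pink', 'red']);

// Assumed sample incomes, not values from data.json.
console.log(colorScale(30000));  // white
console.log(colorScale(45200));  // pink
console.log(colorScale(90000));  // pink
console.log(colorScale(200000)); // red
```

Two cut-off points produce three brackets, which is why the range has one more entry than the domain.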

The quantile scale sets its thresholds so that the data splits into three groups of equal size, so that's exactly what we got. The quantize scale is very similar to the threshold scale, except that D3 chose the thresholds automatically by cutting the domain into equal-width segments. What if we wanted to know what those thresholds were?

switch (scale) {

case 'linear':

colorScale = d3.scaleLinear()

.domain(d3.extent(dataset))

.range(['white','red'])

break

case 'quantize':

colorScale = d3.scaleQuantize()

.domain(d3.extent(dataset))

.range(['white','pink','red'])

console.log("Quantize:", colorScale.thresholds()) // highlight-line

break

case 'quantile':

colorScale = d3.scaleQuantile()

.domain(dataset)

.range(['white','pink','red'])

break

case 'threshold':

colorScale = d3.scaleThreshold()

// domain set manually

.domain([45200,135600])

.range(['white','pink','red'])

break

default:

// Fall back to a constant fill for unknown scale names

colorScale = () => "black"

}

In all three examples we have transformed continuous data into discrete data.
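The difference between the automatically chosen cut-off points is easiest to see on a small, skewed sample. The sketch below computes quantile-style cut-offs (equal-sized groups) and equal-width cut-offs (the quantize approach) for the same numbers. The helper names and the sample data are assumptions for illustration, and the quantile helper assumes linear interpolation between neighbouring sorted values, which may differ in detail from D3's own implementation.

```javascript
// Hypothetical helper: the value at fraction p (0..1) of a sorted array,
// interpolating linearly between neighbours.
function quantileSorted(sorted, p) {
  const pos = (sorted.length - 1) * p;
  const lo = Math.floor(pos);
  const hi = Math.min(lo + 1, sorted.length - 1);
  return sorted[lo] + (sorted[hi] - sorted[lo]) * (pos - lo);
}

// Hypothetical helper: the n - 1 cut-off points that split the data into n groups.
function quantileThresholds(data, n) {
  const sorted = [...data].sort((a, b) => a - b);
  const cuts = [];
  for (let k = 1; k < n; k++) cuts.push(quantileSorted(sorted, k / n));
  return cuts;
}

// Assumed sample data, skewed on purpose; not values from data.json.
const incomes = [10, 20, 30, 40, 50, 60, 1000];

console.log(quantileThresholds(incomes, 3)); // [30, 50]

// Equal-width cut-offs over the same extent, for contrast.
const min = incomes[0];
const max = incomes[incomes.length - 1];
const equalWidth = [1, 2].map((k) => min + (k * (max - min)) / 3);

console.log(equalWidth); // [340, 670]
```

With one very large value in the sample, the equal-width cut-offs put six of the seven values in the first group, while the quantile cut-offs keep the groups roughly even.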

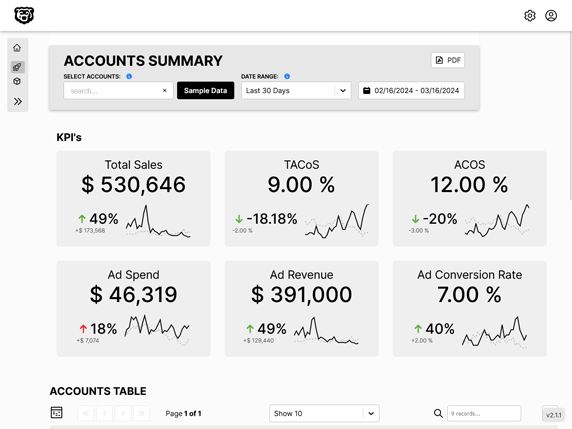

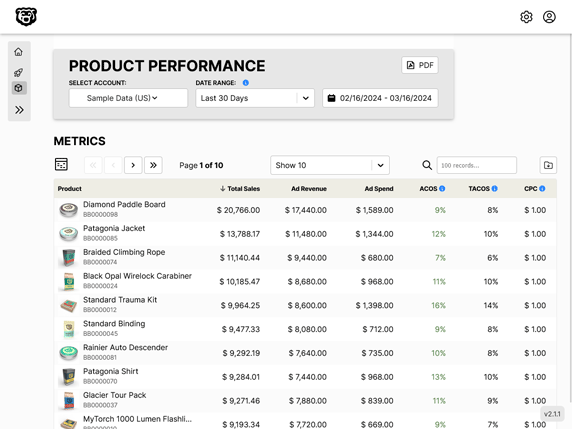

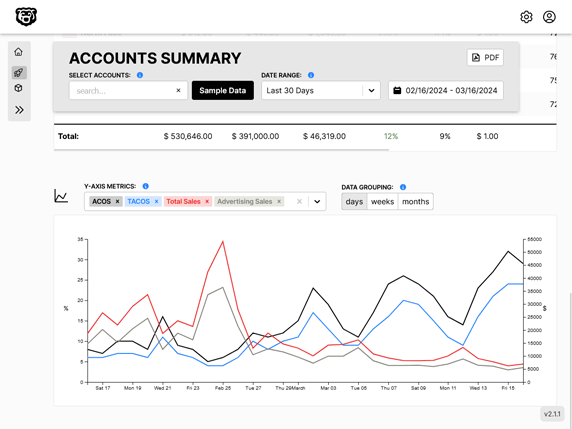

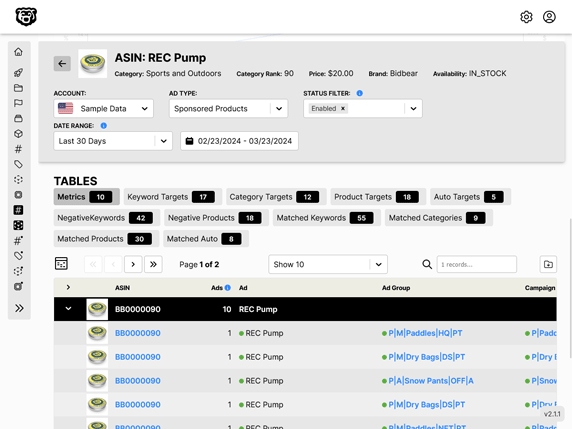
